Project Overview

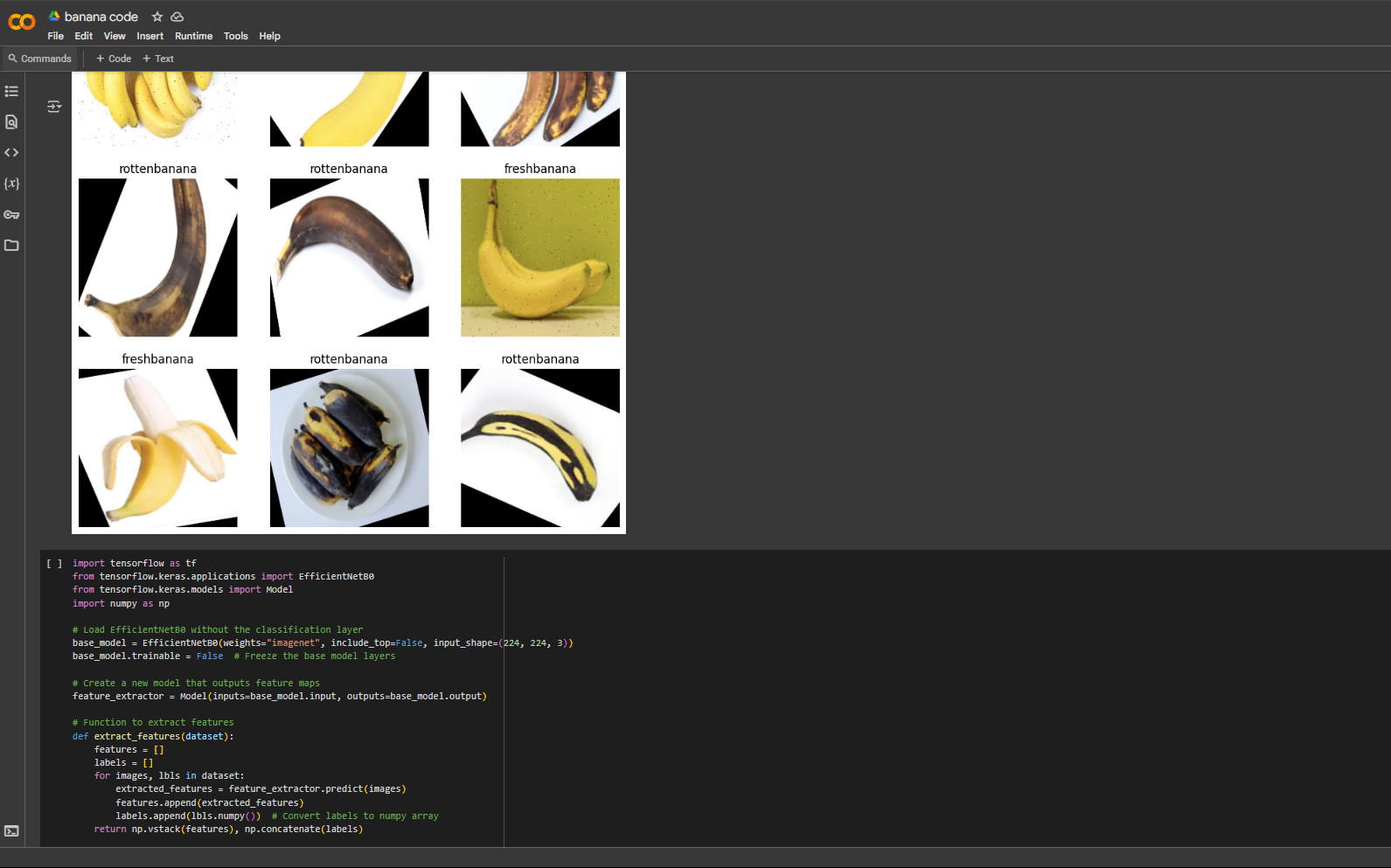

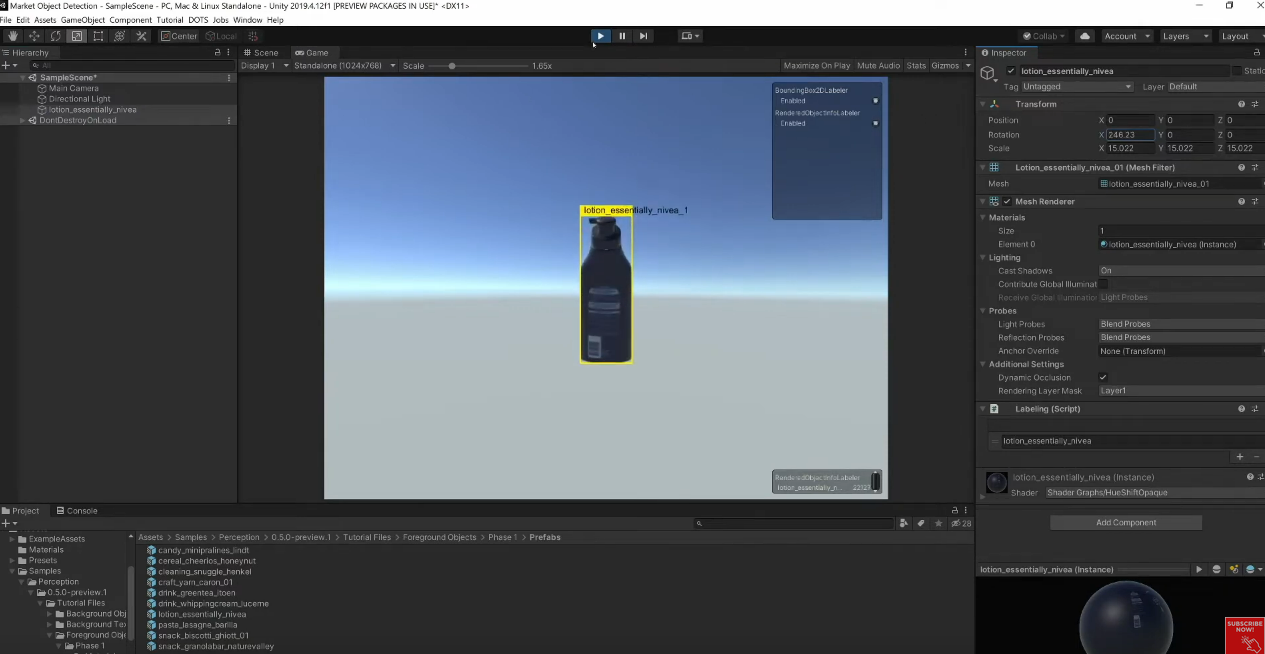

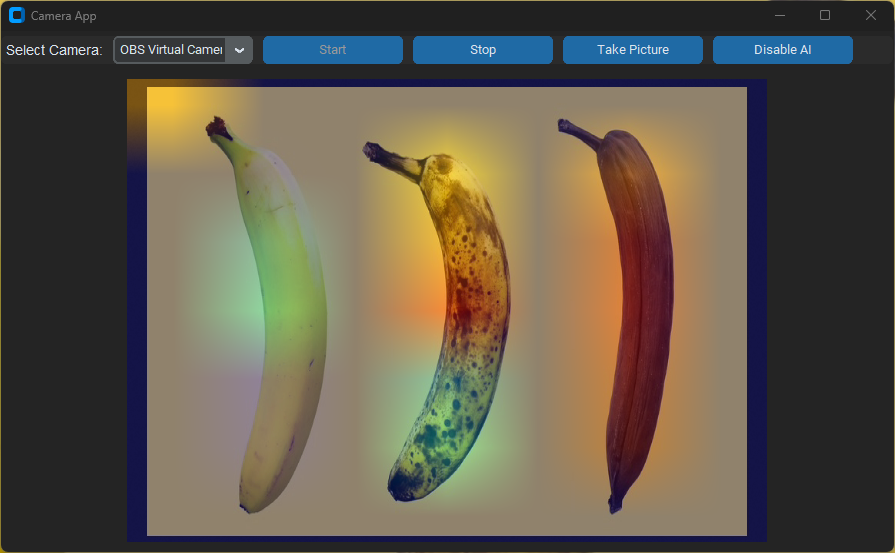

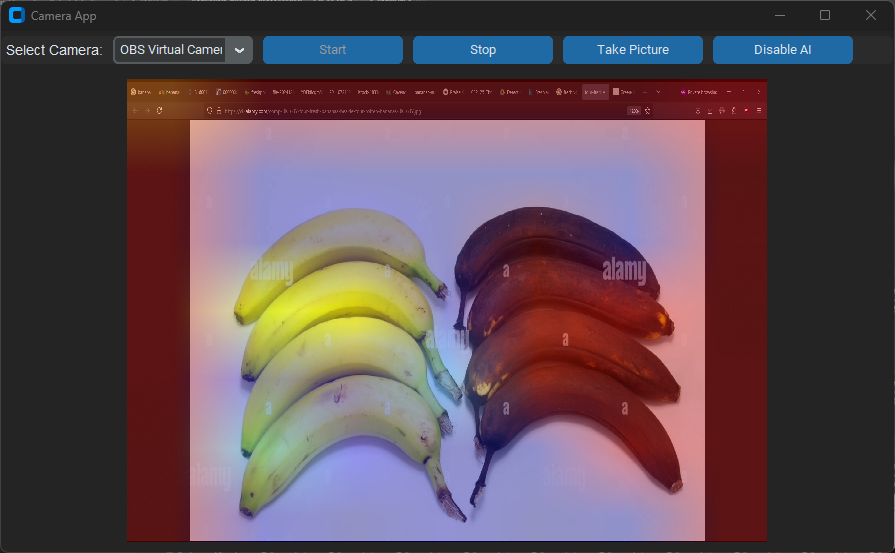

Our project, Anomaly Detection in Augmented Reality Environments, explores the intersection of machine learning and synthetic data generation. We developed a real-time anomaly detection system that identifies irregularities in physical environments through augmented reality interfaces. By combining Unity3D's simulation tools with TensorFlow for modeling, we demonstrate how lightweight deep learning models trained on synthetic datasets can be deployed for real-world anomaly detection in areas such as manufacturing, logistics, and quality control.